Intended Audience

This document describes SAFR Clustering and other advanced deployment strategies available with SAFR. Please be aware that these solutions require specialized support and should not be considered without consulting with SAFR Support to validate design and ensure you have the right support plan to cover these advanced features.

Hardware vs. Software Processing

Clustering applies to both hardware-based (SAFR Camera and SAFR SCAN) and software-based processing. With hardware-based processing, clustering is typically needed only for Access Control when failover is required. For software deployments, clustering can provide both redundancy/failover and horizontal scalability.

Configuring Load Balancing

SAFR Servers can be manually enabled or disabled to accept load balancing traffic.

Note: If the server you want to disable is the only one configured to take traffic, you will receive a warning and a prompt to continue. If you proceed in this case, your system will most likely go offline.

Disable Load Balancing Traffic

To stop receiving traffic on a server, log in to a shell on the server and run the appropriate command for your server's OS:

| OS | Command |
| --- | --- |
| Windows | "C:\Program Files\RealNetworks\SAFR\bin\server-status.py" --disable |
| Linux | sudo /opt/RealNetworks/SAFR/bin/server-status.py --disable |

It may take up to one minute for the desired traffic state to change.

Enable Load Balancing Traffic

To resume receiving traffic on a server, log in to a shell on the server and run the appropriate command for your server's OS:

| OS | Command |
| --- | --- |
| Windows | "C:\Program Files\RealNetworks\SAFR\bin\server-status.py" --enable |
| Linux | sudo /opt/RealNetworks/SAFR/bin/server-status.py --enable |

It may take up to one minute for the desired traffic state to change.
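The enable and disable commands above differ only by OS and flag, so a small wrapper can pick the right one. The following is a minimal sketch, not part of SAFR: the helper name `build_status_command` is hypothetical, and only the script paths and the `--enable` / `--disable` flags are taken from the tables above.

```python
# Script locations as documented in the tables above.
WINDOWS_SCRIPT = r"C:\Program Files\RealNetworks\SAFR\bin\server-status.py"
LINUX_SCRIPT = "/opt/RealNetworks/SAFR/bin/server-status.py"


def build_status_command(os_name, enable):
    """Return the documented command as an argument list (hypothetical helper).

    `os_name` is expected to be "Windows" or "Linux", which matches the
    values returned by Python's platform.system().
    """
    flag = "--enable" if enable else "--disable"
    if os_name == "Windows":
        return [WINDOWS_SCRIPT, flag]
    if os_name == "Linux":
        return ["sudo", LINUX_SCRIPT, flag]
    raise ValueError(f"no documented command for OS: {os_name}")
```

The returned list can be passed to `subprocess.run(..., check=True)` from a shell session on the server. As noted above, allow up to one minute for the traffic state to change before checking the result.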

Upgrading Server Clusters

See the Upgrading a SAFR Server Cluster documentation for details on upgrading your SAFR Servers to the latest version.

Conclusion

SAFR Platform provides an easy-to-deploy, easy-to-manage, enterprise-class solution for a highly available system. SAFR accomplishes this through a combination of data redundancy, failover, horizontal scalability, and vertical scalability. SAFR makes it easy to manage clusters of servers that form a comprehensive highly available system. Please review the SAFR Documentation for more information on SAFR Clustering and how to deploy such a system.

Definitions

First, we’ll take a moment to define the key capabilities of SAFR Clustering.

Data Redundancy

Protecting against data loss is a critical component of any security or business-critical application. SAFR offers a real-time fail-safe measure against data loss through its server clustering feature with redundant data joins. Data is maintained on redundant servers and kept in sync through a real-time synchronization process. The degree of protection can be controlled by specifying the number of nodes in a SAFR cluster that maintain data.

Failover

Ensuring continuous operation is essential for a security or business critical application where interruption of service could mean missed threats or gaps in data. SAFR supports the ability to maintain 24x7x52 continuous operation via its highly available clustering of servers. By design, any one node in a SAFR cluster can take over the responsibilities of another node in the cluster. Sessions are stateless in that any one request can go to any server in the cluster. To this end, SAFR can provide any variation of N+M failover.

Horizontal Scalability

System load cannot always be known ahead of time. A business must be able to adjust as demand for a given service grows. SAFR can scale dynamically with demand, adding capacity when higher demand requires it or reducing capacity when not needed. In this way SAFR optimizes system costs.

Vertical Scalability

Enterprise class systems must be continuously operational for a desirably long period of time to ensure no interruption of essential business functions. High availability architecture is an approach of defining the components, modules, or implementation of services of a system which ensures optimal operational performance, even at times of high loads. SAFR is designed from the ground up to offer continuous availability by scaling its processing with system loads.

Data vs. Objects

Through much of this whitepaper, we will refer to binary or non-binary data. We will use the following definitions to distinguish between the two.

- Data – Refers to the metadata and biometric signatures used to store a person's identity and events. Data is stored in a database.

- Images – Refers to binary objects, which consist almost entirely of images. Images are stored on disk. We may also refer to this as object storage.

Benefits of SAFR Clustering

SAFR offers data redundancy, failover and horizontal scalability by combining one or more servers into a cluster. A SAFR cluster acts as a single unified system sharing a common brain and data source. All nodes are capable of carrying out any transaction made to the system. Any single node can be taken offline without loss of data or functionality.

SAFR uses vertical scalability to ensure that sudden spikes in system load do not lead to a catastrophic failure of the system. It accomplishes this by dynamically scaling demand and spreading resources evenly across all clients when overall load exceeds system resources.

Data redundancy

SAFR manages two types of data as defined above: images and data (metadata). Images are stored on disk and managed through the CVOS (Computer Vision Object Storage) service. Data is stored in a NoSQL database and managed through the SAFR database service. SAFR uses MongoDB, a popular NoSQL database, for its data storage.

Image storage redundancy is achieved through various techniques. The most scalable of these methods is where SAFR gives control to an external data storage system to implement data redundancy and backup procedures. In this way, enterprises can choose the best-fit solution that balances cost and data loss impact. These methods are described below.

Metadata redundancy is achieved by distributing identical copies of the data across multiple nodes. Data is replicated in real time across all nodes with sub-second synchronization rates. As a result, any one node can be brought offline suddenly with no loss of data. While distributing data in this manner replicates storage costs across multiple nodes, less expensive non-redundant storage can be leveraged, and redundancy can be limited to a subset of nodes in the cluster.

Failover

A common high availability design pattern is to use multiple redundant nodes, all of which can perform the same role equally. SAFR applies this design pattern by implementing servers that perform near-atomic actions against a common shared data set. Each server can accept any request. Clients maintain whatever state is required, and all sessions are stateless. In this way, one or more server nodes can fail without impacting the cluster’s operation.
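Because every request is stateless and any server can accept it, a client can simply try another node when one is unreachable. The sketch below illustrates that idea only; `send_with_failover` and the caller-supplied `send` function are hypothetical names, not SAFR APIs.

```python
def send_with_failover(servers, send):
    """Try each server in turn and return the first successful response.

    `servers` is a list of server addresses. `send` is a caller-supplied
    function (hypothetical) that performs one request against one server
    and raises ConnectionError when that node is down.
    """
    last_error = None
    for server in servers:
        try:
            return send(server)
        except ConnectionError as err:
            last_error = err  # node unavailable, try the next one
    raise ConnectionError("no server in the cluster responded") from last_error
```

No session has to be re-established after a failure, because the request carries everything the next server needs.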

Horizontal Scalability

SAFR can be easily scaled to handle higher loads by adding additional nodes. Because each node performs the same function, adding capacity is simply a matter of joining more servers into the cluster.

New nodes can be simple joins (compute node only) or redundant nodes (compute plus data storage). Simple joins are ideal for dynamic scaling scenarios where additional capacity is needed to handle short term spikes in load and may also be suitable in cases where additional data redundancy is unneeded.

Vertical Scalability

While SAFR Clustering itself offers a degree of high availability, we must consider a scenario where the ability of clustering to sustain failure of one or more systems may not protect from sudden spikes in system load. In a clustered environment, a spike in load will impact all servers and thus requires an alternate mechanism to protect against downtime. And even in cases where a single node goes offline, remaining nodes, if already near system capacity, need to be able to absorb the extra demand without resulting in downtime.
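The capacity concern above can be checked with simple arithmetic: after losing some nodes, the remaining capacity must still cover the load. The following is a rough sketch under the assumption that each node's capacity and the total load are expressed in the same unit (for example, recognitions per second); the function name is hypothetical.

```python
def survives_failures(node_capacities, load, failures):
    """Return True if the load still fits after losing the largest nodes.

    The worst case is assumed: the `failures` highest-capacity nodes
    are the ones that go offline.
    """
    keep = max(0, len(node_capacities) - failures)
    remaining = sorted(node_capacities)[:keep]  # drops the largest nodes
    return sum(remaining) >= load
```

For example, three nodes of capacity 100 can absorb a load of 180 after one failure, but not a load of 250.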

SAFR accomplishes vertical scalability by dynamically adjusting the effort it expends on any one activity so as to maintain an acceptable level of service for all activities. This is accomplished differently for detection and for recognition.

Detection is the process of finding faces and objects in video. SAFR does this by scanning every frame of the video, performing detection of objects from frame to frame. If the system becomes overloaded, SAFR is able to reduce the frame rate to dynamically scale the load of all video feeds to within system constraints. In this way, detection is successfully maintained on all video feeds except in extreme overload conditions.
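To illustrate the frame rate reduction described above, the sketch below scales every feed by the same factor so that the total fits within capacity. This is an assumption made for illustration; the document does not specify the exact rule SAFR uses, and the function name is hypothetical.

```python
def scaled_frame_rates(requested_fps, capacity_fps):
    """Scale every feed's frame rate by one factor so the total fits capacity.

    `requested_fps` lists the frame rate of each video feed and
    `capacity_fps` is the total number of frames per second the system
    can process (an assumed, simplified capacity measure).
    """
    total = sum(requested_fps)
    if total <= capacity_fps:
        return list(requested_fps)  # no overload, nothing to reduce
    factor = capacity_fps / total
    return [fps * factor for fps in requested_fps]
```

With two 30 fps feeds and a capacity of 30 frames per second, both feeds drop to 15 fps, so detection continues on every feed at a reduced rate.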

Recognition is the process of matching a face to a known identity. Recognition is a resource-intensive process that must be applied hundreds of thousands of times per second against many faces through many cameras. When SAFR becomes overloaded, a delay between recognition attempts is applied evenly and gradually across all faces. The slowing of recognition attempts will not have a significant impact on SAFR's ability to perform recognition except in extreme cases of system overload.
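The even, gradual delay described above can be pictured as a delay that grows with the degree of overload. The proportional rule below is an assumption chosen for simplicity, not SAFR's documented behavior, and the function and parameter names are hypothetical.

```python
def recognition_delay(base_delay_s, load, capacity):
    """Return the delay between recognition attempts for every tracked face.

    At or below capacity the base delay is used. Above capacity the delay
    grows in proportion to the overload, so all faces slow down evenly.
    """
    if capacity <= 0:
        raise ValueError("capacity must be positive")
    overload = max(1.0, load / capacity)
    return base_delay_s * overload
```

At twice the system capacity, each face is retried half as often, which halves the recognition load without dropping any face entirely.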

Understand When to Scale

At some point, your SAFR system’s capacity and/or performance may degrade if the overall load exceeds a single system’s capacity. One solution to this problem is to increase the capacity of the server on which SAFR is running. But this solution will only scale so far until the cost of the machine becomes prohibitive or other bottlenecks arise such as I/O or compute capacity. And a scaling strategy dependent upon replacing expensive servers is inefficient.

Fortunately, you can install additional SAFR Servers on other machines to increase your SAFR system’s capacity, improve performance, and improve resiliency. The first SAFR Server you install is automatically your primary server, while all additional servers are secondary servers.

By clustering you get a unified system in which load is shared across multiple nodes. The remainder of this section describes the conditions that drive overall system load and performance bottlenecks.

Number of Faces

The number of face recognition requests sent to your SAFR Server is one of the major contributors to server load. The rate of face recognitions is itself driven by the number of cameras and how many faces are at each camera. As described above, SAFR can handle short-term bursts, but in general a system should be capable of handling the number of faces that will appear at peak traffic time. The recognition rate is proportional to the number of cameras multiplied by the number of faces per camera. Thus, high-traffic scenarios such as train stations can result in very high system load, even at moderate camera counts.

Database Size

Performance problems may also arise if the number of people in your Person Directory becomes too large. As the number of people in the Person Directory increases, another multiplier effect comes into play – each face that is recognized must be searched against all faces in the database. Databases that exceed 100,000 identities begin to have a significant impact on load, especially at high face counts.
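The two multipliers described in this section and the previous one can be made concrete with a short calculation. This is a back-of-the-envelope sketch: the `attempts_per_face_per_s` parameter and the example numbers are assumptions for illustration, and the comparison count follows the description above that each recognized face is searched against all identities.

```python
def recognition_rate(cameras, faces_per_camera, attempts_per_face_per_s=1.0):
    """Recognition requests per second generated by all cameras."""
    return cameras * faces_per_camera * attempts_per_face_per_s


def comparisons_per_second(recognitions_per_s, directory_size):
    """Each recognition is searched against every identity in the directory."""
    return recognitions_per_s * directory_size


# Example: 20 cameras with 25 faces each, against 100,000 identities.
rate = recognition_rate(20, 25)
work = comparisons_per_second(rate, 100_000)
```

Doubling either the face count or the directory size doubles `work`, which is why busy sites with large Person Directories reach system limits at moderate camera counts.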

Other factors

Many other factors can contribute to system load. These include camera resolution, required analytics (age, gender, sentiment, mask detection, liveness, person body, badge, etc.), and frame rate. These factors will often drive the balance between GPU and CPU. More analytics typically increase the demand on the GPU. Some analytics, like person-body detection, can impose significant demand on GPU resources.

Video resolution above 4k can also significantly increase GPU demand because this is the resolution where video decode resources start to outpace the recognition resources on the GPU.

Another possible performance bottleneck is the network throughput of the primary server. While not as network intensive as Video Management Systems (VMS), SAFR transmits images for recognition and for display in events, which requires both inbound and outbound network bandwidth on each server. This must be accounted for when designing a system.

Single Server Capacity

The capacity of a single SAFR server can vary significantly. A server is typically sized for a specific use case. The more analytics required the lower the CPU/GPU ratio. In these cases, high GPU capacity systems are ideal to produce the lowest TCO (Total Cost of Ownership).

Systems with low analytics requirements and low or medium traffic typically will have one or two GPUs and cost is primarily driven by server hardware. Such systems would be face recognition only or demographics (age, gender, and sentiment) and typically be used for surveillance (many cameras low density) or low volume retail. Systems of this nature may have relatively inexpensive CPUs such as Xeon Silver 4214 and equally inexpensive GPUs such as RTX A4000. Examples of the most cost-effective chassis for these configurations would be Dell Precision 3930 or 7920. Costs of these systems can range from $4000 USD to $8000 USD and have capacity for 30 – 40 cameras per system with low face counts or 20 cameras with medium face counts.

Systems with more analytics or high traffic will require multiple GPUs and more expensive CPUs. An example of this is a busy train station or performing occupancy counting (two directional traffic counting with single camera) in addition to watchlist. Such a system may require more expensive Xeon Gold CPUs and Tesla L40 or RTX A5000 class GPUs. Due to increased GPU requirements, more expensive chassis such as Dell 740 are required which can host up to 3x RTX A5000 class full size GPUs or 6x low profile GPUs such as Tesla A10. Due to the higher cost CPU and GPUs, the systems can range from 10k to 25k USD per machine.
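As a rough first estimate, the per-server camera capacities quoted above for the lower-cost class of system can be turned into a server count. This sketch uses the conservative end of the quoted range (30 cameras at low face counts, 20 at medium) and a hypothetical function name; it does not replace a proper sizing exercise.

```python
import math

# Conservative cameras-per-server figures for the lower-cost systems
# described above (30 to 40 cameras at low face counts, 20 at medium).
CAMERAS_PER_SERVER = {"low": 30, "medium": 20}


def servers_needed(camera_count, face_density):
    """Estimate how many servers of this class a deployment needs."""
    per_server = CAMERAS_PER_SERVER[face_density]
    return math.ceil(camera_count / per_server)
```

For example, 90 cameras at low face counts would call for about three such servers, before adding any extra nodes for failover headroom.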

Hardware Estimation Tools

While many factors need to be taken into consideration in order to select the optimal system, SAFR has tools available to facilitate this process. Ask your SAFR Presales Engineer or Account Manager for the Hardware Sizing Quick Reference for common applications, or for customized hardware quotes for more advanced applications.

Multiple Server Scaling Architectures

Further down in this whitepaper we will discuss the trade-offs between different clustering architectures and which machine classes lend themselves to optimal TCO and deployment flexibility.

About SAFR Clustering

Primary vs Secondary Nodes

Every SAFR cluster has a single primary node. The primary node is responsible for acquiring and distributing a license and for configuring secondaries, and it can be used as a software load balancer.

Secondary servers are joined to the primary server when building a cluster. Secondary servers generally have the same configuration as the primary, but this is not required. If they are not of the same capacity, however, special rules may need to be applied to load balance between different classes of machine.

There are two types of secondary servers as defined by how they are joined to the cluster.

- Simple Join – Performs compute only and does not replicate data

- Redundant Join – Performs both compute and data replication

It is also possible to configure a joined server to perform data replication only and not compute. This may be useful in very large clusters to optimize systems for database vs. compute.

Data writes vs. data reads

When discussing failover, it is useful to understand how SAFR handles writes vs. reads on its recognition and events database. Writes are only performed against the primary. Data is then replicated to the secondaries but only the primary is able to accept new identities or store new events. Reads on the other hand are done across any secondary server set up as a redundant join.
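The read and write split described above can be sketched as a small routing function. The names are hypothetical, and the fallback of sending reads to the primary when no redundant secondary exists is an assumption made so the sketch also covers a single-server setup.

```python
import random


def choose_node(operation, primary, redundant_secondaries):
    """Pick the node that should handle a database operation.

    Writes always go to the primary. Reads are spread across the
    secondaries that were set up as redundant joins.
    """
    if operation == "write":
        return primary
    if operation == "read":
        if redundant_secondaries:
            return random.choice(redundant_secondaries)
        return primary  # assumption: no replicas, so the primary serves reads
    raise ValueError(f"unknown operation: {operation}")
```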

Database Redundancy

The first SAFR Server you install will automatically become the primary server. All subsequent servers you install will be secondary servers. There are two types of secondary servers:

- Simple: Does not replicate database data.

- Redundant: Replicates database data from the primary server, possibly providing failover functionality. (See the Failover Functionality section below for details.)

With both types of secondary servers, services such as feed management, reports, and the Web Console are not load-balanced and are always served from the primary server.

Failover Functionality

If there are at least two redundant secondary servers (three servers total), failover functionality is enabled. This means that if the primary server goes offline and both of the first two installed redundant secondary servers are still online, one of the redundant secondary servers will become the new primary server and the server cluster will continue to function as normal.

If additional redundant secondary servers are installed beyond the first two, database data will be replicated on them, but they don't count for the purpose of failover functionality.
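The failover rule above depends only on the first two installed redundant secondaries. A minimal sketch of that rule, with a hypothetical function name and input format:

```python
def failover_available(redundant_secondaries_online):
    """Return True if the cluster can promote a new primary.

    `redundant_secondaries_online` lists, in installation order, whether
    each redundant secondary is currently online. Only the first two
    count toward failover; later ones replicate data but are ignored here.
    """
    first_two = redundant_secondaries_online[:2]
    return len(first_two) == 2 and all(first_two)
```

Note that a third redundant secondary being online does not compensate for one of the first two being offline.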

Object Storage Redundancy

Note: Object Storage Redundancy is only available on Windows and Linux.

The Object Storage Service is used for storing objects, such as profile and event images, as well as ephemeral data, such as event reply messages.

The service can operate in a redundant configuration when you have multiple SAFR servers running. All redundant secondary servers are load-balanced by the primary server for all Object Storage Service requests it receives.

Shared Object Storage (Network Storage)

Using shared object storage provides a shared location for each server to save and retrieve objects from. This provides each Object Storage Server with access to all of the objects, rather than just objects saved to their local storage.

Shared storage also provides an easier backup process, as you only have to run it from the primary server.

Local Object Storage (Not Recommended)

By default, all redundant servers save objects locally and ask other Object Storage Servers for objects they do not have locally.

When you're using local object storage, you will lose access to all objects that are only stored by an offline Object Storage Server until the server becomes healthy again. If that server's objects are lost, and you do not have backups, they will be unrecoverable.

Backups must be run on every redundant server that has Object Storage enabled.

Object (Image) Storage

There are two types of object storage, defined by where images are stored.

- Local – Each node in cluster stores images on local disk and records location (which node) of the image into the database. Images are evenly distributed across all nodes in the cluster. Images stored on any one node are unavailable if that node is offline.

- Shared (Network) – Images are stored on a networked file share (NFS, SMB or SAN). All servers read and write from the same common storage location. Images are available independently of the availability of any one node in the system.

Shared (Network) storage is strongly recommended over local image storage.
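The availability difference between the two storage types can be shown with a small model. This is an illustrative sketch only; the function name and the mapping of image IDs to storing nodes are hypothetical stand-ins for the location records described above.

```python
def available_images(image_locations, online_nodes, shared_storage):
    """Return the set of image IDs that can currently be read.

    `image_locations` maps each image ID to the node that stored it.
    With shared storage, availability does not depend on any one node.
    With local storage, an image is readable only while its node is online.
    """
    if shared_storage:
        return set(image_locations)
    return {img for img, node in image_locations.items() if node in online_nodes}
```

With local storage and one of three nodes offline, roughly a third of the images become unreadable until that node returns, which is the main reason shared storage is recommended.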

Backup and Restore

While not specifically required for high availability, we would be remiss if we were to not say a couple of words about backup and restore.

For SAFR clustering, because of database redundancy, only the primary server database needs to be backed up unless local object storage is used. Scripts exist to create a backup of the database. A backup can be restored onto the SAFR primary server, which in turn will sync its data to the secondaries in the cluster.

Backup of secondary servers is not required unless local object storage is used, because it would be redundant to a backup of the primary server. If local object storage is used, back up only the objects on each secondary server.

Backups can include images or omit them. Because images take up the majority of space, a backup that includes images can be quite large and may take many hours or days to generate and restore. A good strategy is to store images on a redundant drive, use technologies like rsync or cloud backup services to back up your images, and omit images from the SAFR backup. In this way the SAFR backup will be far quicker and consume much less space.
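As one way to apply the rsync strategy mentioned above, the sketch below builds an rsync command that copies an image directory to a backup target. Both paths are caller-supplied placeholders, since this document does not state where a given installation stores its images, and the helper name is hypothetical.

```python
def build_image_backup_command(image_dir, backup_target):
    """Return an rsync command that copies the image directory to a target.

    -a (archive mode) recurses and preserves timestamps and permissions.
    The trailing slash on the source copies the directory's contents
    rather than the directory itself.
    """
    source = image_dir.rstrip("/") + "/"
    return ["rsync", "-a", source, backup_target]
```

Because rsync transfers only files that changed, repeated runs stay fast even when the image store is large.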

More details on deployment best practices can be found at https://support.safr.com/en/support/solutions/articles/69000802190-safr-deployment-best-practice.

Load Balancing Deployment Architectures

SAFR offers three deployment models that support load balancing to various degrees. The table below summarizes the different deployment types. The subsequent sections describe each of these in further depth.

|

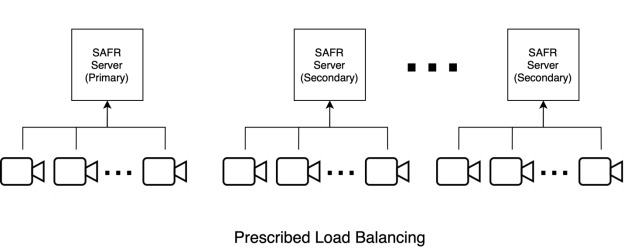

Prescribed Load Balancing |

Cameras are connected to Desktop Clients or Video Recognition Gateway (VIRGO) video feeds running on the same machines that are hosting your SAFR Servers. This gives you tight control over how your face recognition load is distributed, since the video feeds’ face recognition requests are processed on the same machine where the video feeds are connected. |

|

|

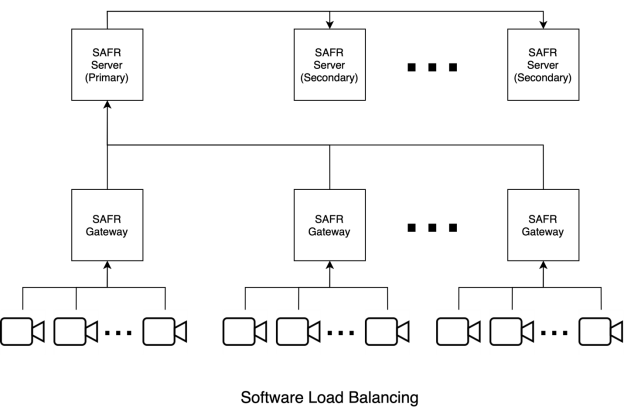

Software Load Balancing |

In this configuration the machines hosting SAFR Servers do not also have cameras connected to them. All face recognition requests are initially sent to the primary server, and the primary server acts as the load balancer for the server cluster. |

|

|

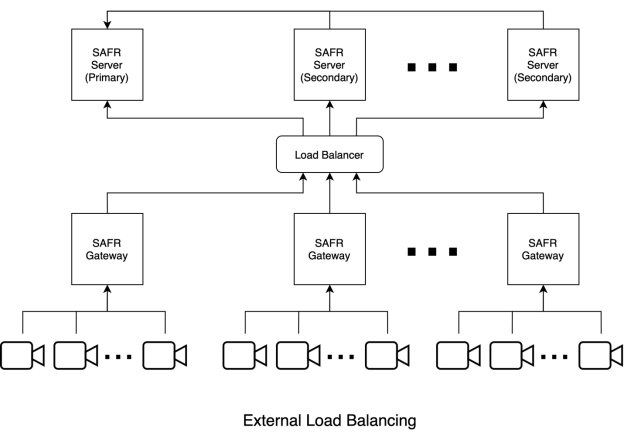

External Load Balancing |

In this configuration all face recognition requests are directed at one or more external load balancer(s), which handle load balancing duties for the SAFR system. |

|

Prescribed Load Balancing Configuration

In the prescribed configuration, you run multiple SAFR Servers by connecting cameras to Desktop Clients or VIRGO video feeds running on the same machines that are hosting SAFR Servers. In this way, you have tight control over which servers take the video feed load. This is also a useful configuration for systems with very low video feed count totals where running a Desktop Client on a separate machine from the SAFR Server would take more resources than are required for the given use case.

The following diagram illustrates this configuration:

Most services (e.g. face service, events, and reports) are performed on the server where recognition requests are sent.

The system requirements of the Desktop Client need to be combined with those of SAFR Server when calculating the system requirements for a machine hosting the SAFR Server and Desktop Client. Because detection takes considerable GPU, systems using Prescribed Load Balancing have a higher CPU:GPU ratio and tend to require 3 or more GPUs to meet requirements.

Software-Based Load Balancing Configuration

In the software-based load balancing configuration, cameras aren’t connected to machines running SAFR Servers. When newly installed secondary servers are configured, they check in with the primary server and announce that they’re ready to receive load-balanced traffic. All recognition requests go through the primary server, which balances the load among itself and all other servers in the SAFR system. The following illustration demonstrates this setup:

External Load Balancing Configuration

The software-based load balancing configuration has the limitation that the primary server is a single point of failure. All traffic is routed through the primary server before any traffic is redirected to the rest of the servers. If the primary server is down, all traffic will stop. External load balancing is an alternate configuration that can be used to provide a more robust setup that can better deal with server failure.

When using an external load balancing configuration, all network traffic is first routed to one or more load balancer(s), and the load balancer(s) proxy requests to the backend servers over either HTTP or HTTPS. HTTP would be OK in situations where network traffic is isolated to a trusted network, or when network sniffing by non-target hosts is impossible.

Note: An external load balancer may be software or hardware based. The software load balancing implemented by the SAFR primary server is different from software-based load balancing external to SAFR.

If HTTPS is used to proxy traffic to SAFR servers, you should manually disable load balancing on all secondary servers, as described in the Disable Load Balancing Traffic section, so that the primary server isn’t double load balancing traffic to them. A valid (i.e. non self-signed) SSL certificate would still need to be installed and configured on the primary server. Secondary servers should be fine with the default (i.e. self-signed) certificate, if your load balancer allows it.

Failover and Redundancy

Redundancy

Most functions in SAFR are designed to allow the same activity to be run on any node. In this way, SAFR handles the sudden loss of any one server simply by directing requests to alternate servers. In some load balancing modes this is automatic, and in others it is manual.

Failover

Some functions of SAFR require a central coordination. These functions vary depending on the type of load balancing deployed. The following table summarizes failover and redundancy for each of the load balancing methods.

Redundant/Failover by Function

The key functions that must be distributed to ensure continuous operation of the system are:

- Video Feed Processing – Video feeds are assigned to a specific processor (machine). If a processor fails, the feed must be re-assigned to a new processor. Methods for automating feed failover are available via REST APIs.

- Recognition/Analytics – Recognition or other analytics (age, gender, sentiment) are performed as an atomic, stateless activity. An image is submitted to any server, a model is applied, matching is performed if needed, and a response is returned. These operations complete within a few milliseconds.

- Data writes – Data writes (to database) are needed for creating or updating identities and events. All writes must occur against the primary database instance. The write responsibility is not redundant but can failover from one instance to another in event of failure of the write instance.

- Data reads – Data reads (from database) are needed for reading identities, performing matches, and reading events. Because all data is replicated on all nodes, reads are redundant and can occur on any server.

- Image reads/writes – Image reads/writes are dependent upon the external storage array. The storage array should be a highly available system with redundancy to ensure end-to-end redundancy.

- Events – Events are obtained through the event service, which runs on each SAFR server node. Thus, the event service is redundant. The event service, however, is dependent upon the availability of both the images and the data read redundancy and failover capabilities.

- Reports – Like events, the reporting service runs on each node and is redundant, but generation of reports is dependent upon availability of the data and images.

The following table summarizes the redundancy and failover behavior for applicable actions.

| | Redundancy | Failover |
| --- | --- | --- |
| Data reads | | |
| Image read/write (1) | | |
| Events | | |
| Reports | | |

(1) Image read/write redundancy is dependent upon the external storage array. The storage array should be a highly available system with redundancy to ensure end-to-end redundancy.

Video Feed Failover

Video feeds are connected directly to a Video Gateway. SAFR does not provide a built-in feature to support redundancy or failover of video feeds. But REST APIs exist that can report status of all feeds and programmatically move a feed from one processor to another. In this way, automation can be built that will perform automatic failover of video feeds. While redundancy is possible it is not recommended due to hardware costs and duplication of event data.
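The automation described above needs a rule for deciding where each orphaned feed should go. The sketch below shows only that planning step, moving feeds from failed processors to the least-loaded healthy one. It makes no REST calls: the function name and input shapes are hypothetical, and the feed status and the move operation would come from the SAFR REST APIs, whose endpoints are not covered here.

```python
def plan_feed_moves(feed_assignments, healthy_processors):
    """Return {feed: new_processor} for feeds on processors that failed.

    `feed_assignments` maps each feed name to its current processor.
    Feeds are moved to whichever healthy processor has the fewest feeds,
    with ties broken by processor name for a deterministic plan.
    """
    if not healthy_processors:
        raise ValueError("no healthy processor available")
    load = {p: 0 for p in healthy_processors}
    for processor in feed_assignments.values():
        if processor in load:
            load[processor] += 1
    moves = {}
    for feed, processor in sorted(feed_assignments.items()):
        if processor not in load:  # current processor is not healthy
            target = min(load, key=lambda p: (load[p], p))
            moves[feed] = target
            load[target] += 1
    return moves
```

A monitoring job could run this plan on a schedule and then apply each move through the API, which gives failover without the duplicated event data that full feed redundancy would produce.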

Redundancy and Failover by Load Balancing Method

This section describes the behavior of each of the Load Balancing methods as a result of a server failure. This section is intended to help the reader decide the method of load balancing preferred.

Prescribed Load Balancing Redundancy and Failover

With Prescribed Load Balancing, all cameras are connected directly to a server. Failure of a single server will result in failure of all feeds processed by that server. But feeds connected to other servers will continue to function as normal, as will recognition, events, and reporting.

If a secondary goes offline, the primary continues to function as normal and the remaining servers continue operation with no degradation of service.

A failure of the primary will also result in no degradation of service to the remaining servers, but the role of data writes will be transferred to one of the remaining secondaries.

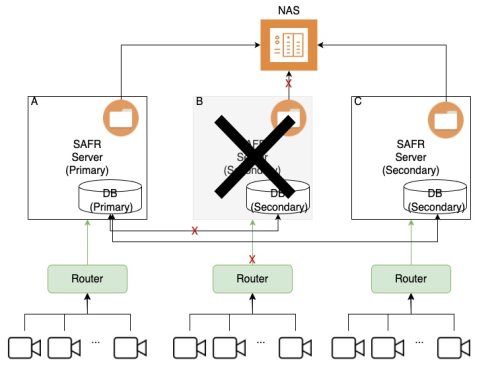

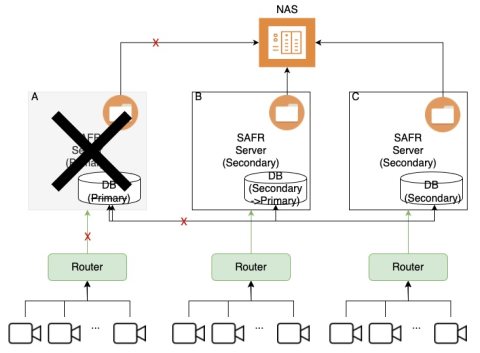

Software Load Balanced Redundancy and Failover

Because the Primary SAFR Server is performing load balancing, Software load balancing is impaired if the primary server goes offline. But failure of any of the secondaries should have no impact on remaining servers as long as remaining servers have capacity to handle the system load.

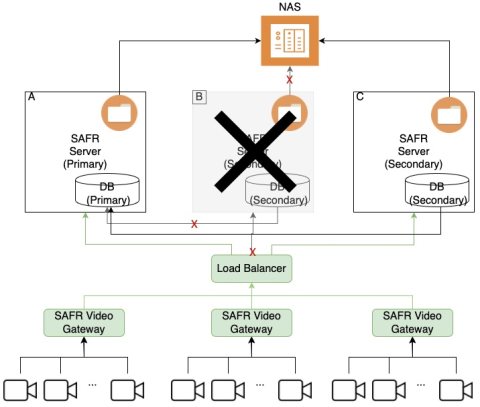

But with Software load balancing, failure of the primary results in all servers becoming unavailable as shown below.

Even though the secondaries are still functioning, they are essentially disconnected from the cameras due to the failure of the primary. The primary must be brought back online in order to restore service to normal.

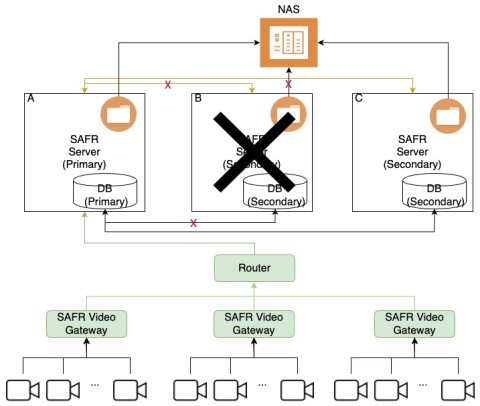

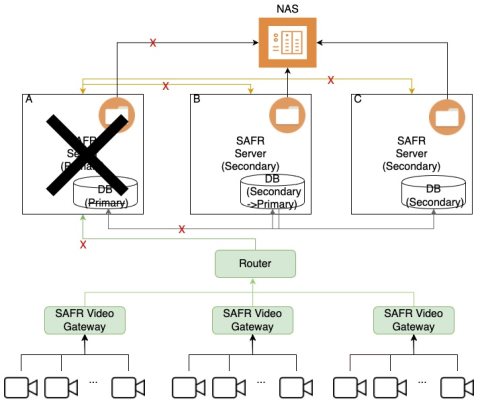

External Load Balanced Redundancy and Failover

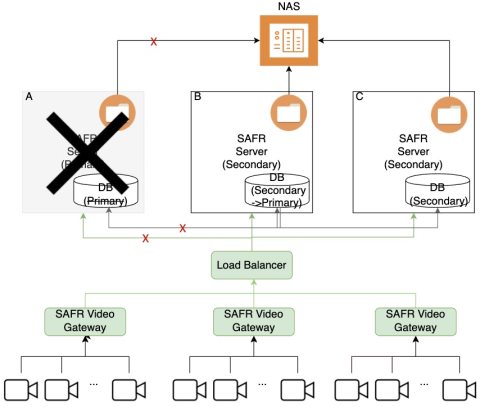

With External Load Balancing, all servers are accessible independently and can perform their roles. Unlike with software load balancing, the system will continue to function as normal if either the primary or secondary goes offline as long as there is sufficient capacity in remaining servers.

A failure of the secondary server is demonstrated below. Despite the secondary being offline, all remaining servers continue to function as normal.

If the primary server goes offline, the system still continues to function. There will be a pause of a few seconds while one of the remaining secondaries assumes the role of primary database node. During this time a few writes may fail; clients can retry them.
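Client-side retries are enough to ride out the short pause while a new primary database node takes over. The following is a minimal sketch with hypothetical names: `write` stands for any caller-supplied function that performs one write and raises ConnectionError on failure, and the attempt count and delay are illustrative defaults.

```python
import time


def write_with_retry(write, attempts=5, delay_s=1.0, sleep=time.sleep):
    """Call `write` until it succeeds or the attempts are used up.

    A fixed delay between attempts gives the cluster time to promote a
    new primary. The last failure is re-raised if every attempt fails.
    """
    for attempt in range(1, attempts + 1):
        try:
            return write()
        except ConnectionError:
            if attempt == attempts:
                raise
            sleep(delay_s)
```

With the defaults, a write is retried for about four seconds in total, which matches the few-second pause described above.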

Summary of Load Balancing Modes

The table below summarizes the behavior with each of the Load Balancing Modes.

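| Mode | Primary Offline | Secondary Offline |
| --- | --- | --- |
| Prescribed Load Balanced | Feeds processed by the primary fail; remaining servers continue with no degradation, and data writes transfer to one of the remaining secondaries | Feeds processed by that secondary fail; the primary and remaining servers continue as normal |
| Software Load Balanced | All servers become unavailable until the primary is brought back online | No impact on remaining servers as long as they have capacity to handle the system load |
| External Load Balanced | System continues to function after a pause of a few seconds; writes that fail during the pause can be retried | System continues to function as normal as long as remaining servers have sufficient capacity |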

Deployment

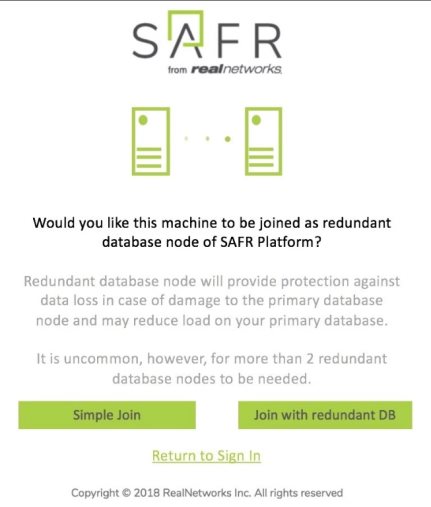

SAFR has taken all the complexity out of creating clustered server configurations. Creating a cluster is as simple as installing a second server and choosing the type of join during installation.

During installation, SAFR will prompt for license credentials. Once they are entered, it connects to the SAFR License server. If the license has already been issued, the license server returns the hostname or IP address of the primary server. The installer then prompts for the type of join and performs the appropriate actions to join the cluster.

Behind the scenes, the SAFR installer configures the database to add the new server to the replica set and configures other settings, such as adding the new node to the Software Load Balancing configuration on the primary.

Once connected, the primary server will synchronize its data with the new server. The new server will then be part of the cluster. The same identities and events will appear seamlessly on all nodes.